Why Ruby may be the best language to write your next AI web application

📗 This is the second in a multi-part series on creating web applications with generative AI integration. Part 1 focused on explaining the AI stack and why the application layer is the best place in the stack to be. Check it out here.

Table of Contents

- Introduction

- I thought SPAs were supposed to be relaxing?

- Microservices are for Macrocompanies

- Ruby and Python: Two Sides of the Same Coin

- Recent AI-based Gems

- Summary

Introduction

It’s not often that you hear the Ruby language mentioned when discussing AI.

Python, of course, is the king in this world, and for good reason. The community has coalesced around the language. Most model training is done in PyTorch or TensorFlow these days. Scikit-learn and Keras are also very popular. RAG frameworks such as LangChain and LlamaIndex cater primarily to Python.

However, when it comes to building web applications with AI integration, I believe Ruby is the better language.

As the co-founder of an agency dedicated to building MVPs with generative AI integration, I frequently hear potential clients complaining about two things:

- Applications take too long to build

- Developers are quoting insane prices to build custom web apps

These complaints have a common source: complexity. Modern web apps have a lot more complexity in them than in the good ol’ days. But why is this? Are the benefits brought by complexity worth the cost?

I thought SPAs were supposed to be relaxing?

One big piece of the puzzle is the recent rise of single-page applications (SPAs). The most popular stack used today in building modern SPAs is MERN (MongoDB, Express.js, React.js, Node.js). The stack is popular for a few reasons:

- It is a JavaScript-only stack across both front-end and back-end. Having to code in only one language is pretty nice!

- SPAs can offer dynamic designs and a “smooth” user experience. Smooth here means that when some piece of data changes, only a part of the site is updated, as opposed to having to reload the whole page. Of course, if you don’t have a modern smartphone, SPAs won’t feel so smooth, as they tend to be pretty heavy. All that JavaScript starts to drag down the performance.

- There is a large ecosystem of libraries and developers with experience in this stack. This is pretty circular logic: is the stack popular because of the ecosystem, or is there an ecosystem because of the popularity? Either way, this point stands.

- React was created by Meta. Lots of money and effort has been thrown at the library, helping to polish and promote the product.

Unfortunately, there are some downsides of working in the MERN stack, the most critical being the sheer complexity.

Traditional web development was done using the Model-View-Controller (MVC) paradigm. In MVC, all of the logic managing a user’s session is handled in the backend, on the server. Something like fetching a user’s data was done via function calls and SQL statements in the backend. The backend then serves fully built HTML and CSS to the browser, which just has to display it. Hence the name “server”.

In a SPA, this logic is handled on the user’s browser, in the frontend. SPAs have to handle UI state, application state, and sometimes even server state all in the browser. API calls have to be made to the backend to fetch user data. There is still quite a bit of logic on the backend, mainly exposing data and functionality through APIs.
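To make the contrast concrete, here is a minimal sketch in plain Ruby using only the standard library. The `user` hash and the template are made up for illustration; a real Rails app would use views and controllers rather than raw ERB.

```ruby
require "erb"
require "json"

# Hypothetical record; in a real app this would come from a database query.
user = { name: "Ada", plan: "Pro" }

# MVC style: the server fills in a template and ships finished HTML.
template = ERB.new("<h1>Welcome, <%= user[:name] %>!</h1><p>Plan: <%= user[:plan] %></p>")
html = template.result(binding)

# SPA style: the server ships raw data only. Browser-side JavaScript must
# fetch it, hold it in state, and build the markup itself.
json = JSON.generate(user)

puts html
puts json
```

In the first case the browser only has to display what it receives. In the second, everything after the fetch is the frontend's job.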

To illustrate the difference, let me use the analogy of a commercial kitchen. The customer will be the frontend and the kitchen will be the backend.

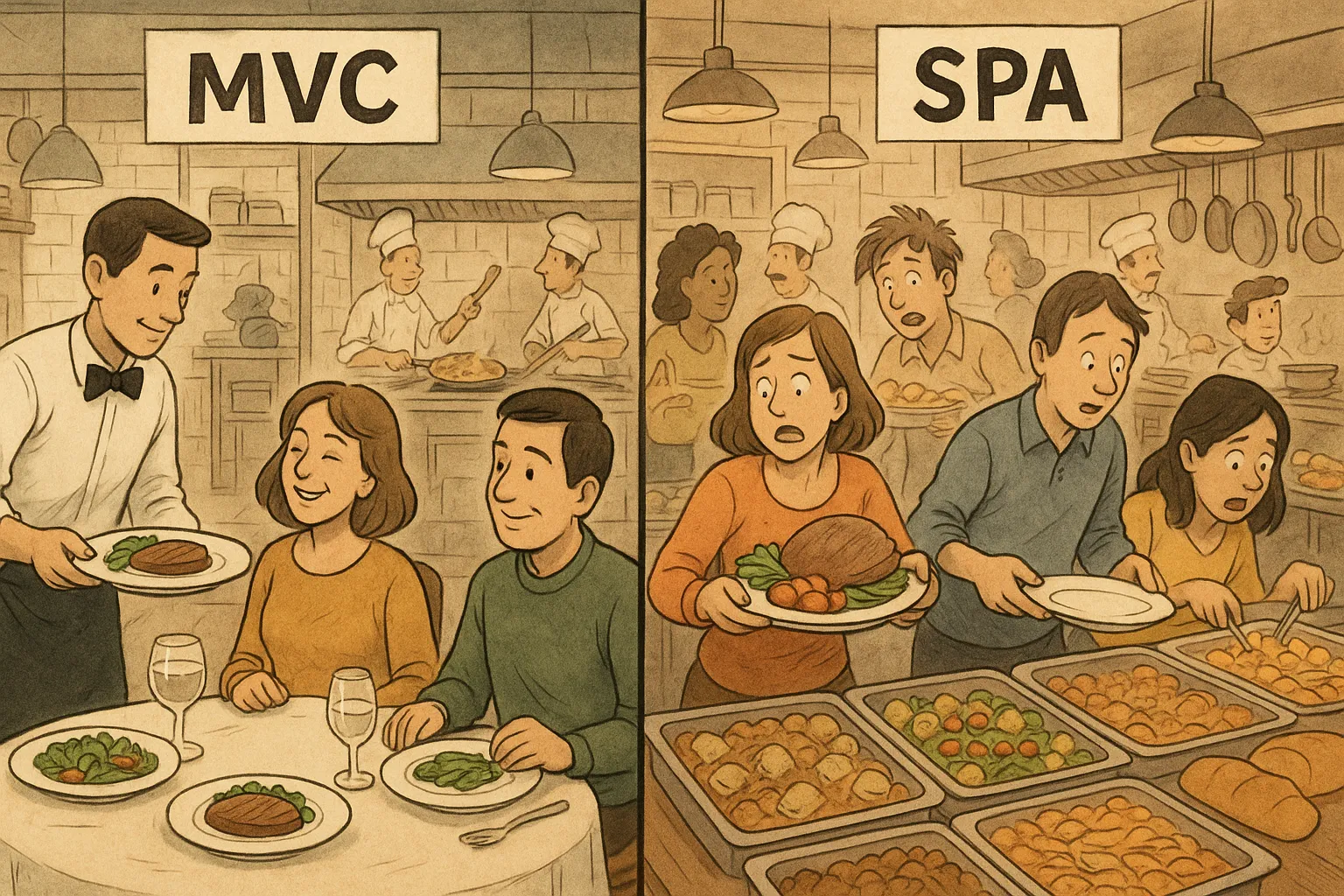

MVCs vs. SPAs. Image generated by ChatGPT.

Traditional MVC apps are like dining at a full-service restaurant. Yes, there is a lot of complexity (and yelling, if The Bear is to be believed) in the backend. But the frontend experience is simple and satisfying: all the customer has to do is pick up a fork and eat their food.

SPAs are like eating at a buffet-style restaurant. There is still quite a bit of complexity in the kitchen. But now the customer also has to decide which dishes to grab, how to combine them, how to arrange them on the plate, where to put the plate when finished, etc.

Andrej Karpathy had a tweet recently discussing his frustration with attempting to build web apps in 2025. It can be overwhelming for those new to the space.

In order to build MVPs with AI integration rapidly, our agency has decided to forgo the SPA and instead go with the traditional MVC approach. In particular, we have found Ruby on Rails (often denoted as Rails) to be the framework best suited to quickly developing and deploying quality apps with AI integration. Ruby on Rails was developed by David Heinemeier Hansson in 2004 and has long been known as a great web framework, but I would argue it has recently made leaps in its ability to incorporate AI into apps, as we will see.

Django is the most popular Python web framework, and also has a more traditional pattern of development. Unfortunately, in our testing we found Django was simply not as full-featured or “batteries included” as Rails is. As a simple example, at the time of our testing Django had no built-in background job system. Nearly all of our apps incorporate background jobs, so its absence was disappointing. We also prefer how Rails emphasizes simplicity, with Rails 8 encouraging developers to self-host their apps instead of going through a provider like Heroku. The Rails team also recently released a set of database-backed tools (Solid Queue, Solid Cache, and Solid Cable) meant to replace external services like Redis.

“But what about the smooth user experience?” you might ask. The truth is that modern Rails includes several ways of crafting SPA-like experiences without all of the heavy JavaScript. The primary tool is Hotwire, which bundles tools like Turbo and Stimulus. Turbo lets you dynamically change pieces of HTML on your webpage without writing custom JavaScript. For the times where you do need to include custom JavaScript, Stimulus is a minimal JavaScript framework that lets you do just that. Even if you want to use React, you can do so with the react-rails gem. So you can have your cake, and eat it too!
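As a rough illustration of how Turbo updates part of a page, the server can respond with a small HTML fragment wrapped in a `<turbo-stream>` element, and Turbo applies it to the matching element in the browser. Rails ships its own helpers for this; the `turbo_stream_append` method below is a hypothetical stand-in written in plain Ruby, only meant to show the shape of such a fragment.

```ruby
require "erb"

# Hypothetical helper (not part of Rails): builds the kind of fragment
# that Turbo Streams understand. `target` is the DOM id to append to.
def turbo_stream_append(target, html)
  <<~HTML
    <turbo-stream action="append" target="#{ERB::Util.html_escape(target)}">
      <template>#{html}</template>
    </turbo-stream>
  HTML
end

# Escape user-supplied text before placing it in markup.
message = ERB::Util.html_escape("Hello <world>")
fragment = turbo_stream_append("messages", "<li>#{message}</li>")

puts fragment
```

No custom JavaScript is involved here: the server decides what changes, and the page applies it.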

SPAs are not the only reason for the increase in complexity, however. Another has to do with the advent of the microservices architecture.

Microservices are for Macrocompanies

Once again, we find ourselves comparing the simple past with the complexity of today.

In the past, software was primarily developed as monoliths. A monolithic application means that all the different parts of your app — such as the user interface, business logic, and data handling — are developed, tested, and deployed as one single unit. The code is all typically housed in a single repo.

Working with a monolith is simple and satisfying. Running a development setup for testing purposes is easy. You are working with a single database schema containing all of your tables, making queries and joins straightforward. Deployment is simple, since you just have one container to look at and modify.

However, once your company scales to the size of a Google or Amazon, real problems begin to emerge. With hundreds or thousands of developers contributing simultaneously to a single codebase, coordinating changes and managing merge conflicts becomes increasingly difficult. Deployments also become more complex and risky, since even minor changes can blow up the entire application!

To manage these issues, large companies began to coalesce around the microservices architecture. This is a style of programming where you design your codebase as a set of small, autonomous services. Each service owns its own codebase, data storage, and deployment pipelines. As a simple example, instead of stuffing all of your logic regarding an OpenAI client into your main app, you can move that logic into its own service. To call that service, you would then typically make REST calls, as opposed to function calls. This ups the complexity, but resolves the merge conflict and deployment issues, since each team in the organization gets to work on their own island of code.
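The difference between a function call and a REST call can be sketched with the standard library alone. Everything here is illustrative: `Summarizer` is a hypothetical stand-in for real business logic, and the toy server handles exactly one request, which no production service would do.

```ruby
require "json"
require "net/http"
require "socket"

# Hypothetical business logic standing in for an LLM client wrapper.
module Summarizer
  def self.summarize(text)
    text.split.first(3).join(" ") + "..."
  end
end

text = "Ruby makes building web apps fun"

# Monolith: the logic lives in the same process, so it is one function call.
direct = Summarizer.summarize(text)

# Microservice: the same logic sits behind HTTP. This toy server handles
# a single request on a free local port and then stops.
server = TCPServer.new("127.0.0.1", 0)
port = server.addr[1]

service = Thread.new do
  client = server.accept
  client.gets # request line, e.g. "POST /summarize HTTP/1.1"
  headers = {}
  while (line = client.gets) && line != "\r\n"
    name, value = line.split(":", 2)
    headers[name.downcase] = value.strip
  end
  body = client.read(headers.fetch("content-length").to_i)
  payload = JSON.generate(summary: Summarizer.summarize(JSON.parse(body)["text"]))
  client.write "HTTP/1.1 200 OK\r\n" \
               "Content-Type: application/json\r\n" \
               "Content-Length: #{payload.bytesize}\r\n" \
               "Connection: close\r\n\r\n" \
               "#{payload}"
  client.close
end

# The caller now has to serialize, send, wait, and parse.
response = Net::HTTP.post(
  URI("http://127.0.0.1:#{port}/summarize"),
  JSON.generate(text: text),
  "Content-Type" => "application/json"
)
remote = JSON.parse(response.body)["summary"]

service.join
server.close

puts direct
puts remote
```

Both paths return the same string, but the second one adds serialization, routing, and a network hop that can fail independently of the logic itself.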

Another benefit to using microservices is that they allow for a polyglot tech stack. This means that each team can code up their service using whatever language they prefer. If one team prefers JavaScript while another likes Python, this is no issue. When we first began our agency, this idea of a polyglot stack pushed us to use a microservices architecture. Not because we had a large team, but because we each wanted to use the “best” language for each functionality. This meant:

- Using Ruby on Rails for web development. It’s been battle-tested in this area for decades.

- Using Python for the AI integration, perhaps deployed with something like FastAPI. Serious AI work requires Python, I was led to believe.

Two different languages, each focused on its area of specialty. What could go wrong?

Unfortunately, we found the process of development frustrating. Just setting up our dev environment was time-consuming. Having to wrangle Docker compose files and manage inter-service communication made us wish we could go back to the beauty and simplicity of the monolith. Having to make a REST call and set up the appropriate routing in FastAPI instead of making a simple function call sucked.

“Surely we can’t develop AI apps in pure Ruby,” I thought. And then I gave it a try.

And I’m glad I did.

I found the process of developing an MVP with AI integration in Ruby very satisfying. We were able to sprint where before we were jogging. I loved the emphasis on beauty, simplicity, and developer happiness in the Ruby community. And I found the state of the AI ecosystem in Ruby to be surprisingly mature and getting better every day.

If you are a Python programmer and are scared off by learning a new language like I was, let me comfort you by discussing the similarities between the Ruby and Python languages.

Ruby and Python: Two Sides of the Same Coin

I consider Python and Ruby to be like cousins. Both languages incorporate:

- High-level Interpretation: This means they abstract away a lot of the complexity of low-level programming details, such as memory management.

- Dynamic Typing: Neither language requires you to specify if a variable is an int, float, string, etc. The types are checked at runtime.

- Object-Oriented Programming: Both languages are object-oriented. Both support classes, inheritance, polymorphism, etc. Ruby is more “pure”, in the sense that literally everything is an object, whereas in Python a few things (such as if and for statements) are not objects.

- Readable and Concise Syntax: Both are considered easy to learn. Either is great for a first-time learner.

- Wide Ecosystem of Packages: Packages to do all sorts of cool things are available in both languages. In Python they are called libraries, and in Ruby they are called gems.
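For Python programmers, a short sketch of the dynamic typing and "everything is an object" points from the list above may help. It uses only core Ruby.

```ruby
# In Ruby, even literals and nil are objects with a class and methods.
puts 5.class          # Integer
puts nil.class        # NilClass
puts 3.7.round        # 4
puts "gem".upcase     # GEM

# Dynamic typing: a variable can be rebound to a value of another type,
# and type errors surface only when the offending line actually runs.
value = 42
value = "forty-two"
puts value.class      # String

error =
  begin
    1 + "1"
  rescue TypeError => e
    e.class
  end
puts error            # TypeError
```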

The primary difference between the two languages lies in their philosophy and design principles. Python’s core philosophy can be described as:

There should be one — and preferably only one — obvious way to do something.

In theory, this should emphasize simplicity, readability, and clarity. Ruby’s philosophy can be described as:

There’s always more than one way to do something. Maximize developer happiness.

This was a shock to me when I switched over from Python. Check out this simple example emphasizing this philosophical difference:

    # A fight over philosophy: iterating over a range

    # Pythonic way
    for i in range(1, 6):
        print(i)

    # Ruby way, option 1
    (1..5).each do |i|
      puts i
    end

    # Ruby way, option 2
    for i in 1..5
      puts i
    end

    # Ruby way, option 3
    5.times do |i|
      puts i + 1
    end

    # Ruby way, option 4
    (1..5).each { |i| puts i }

Another difference between the two is syntax style. Python uses indentation to denote code blocks, while Ruby uses do…end or {…} blocks. Most Rubyists indent the code inside a block anyway, but this is entirely optional. Examples of these syntactic differences can be seen in the code shown above.

There are a lot of other little differences to learn. For example, string interpolation in Python is done using f-strings: f"Hello, {name}!", while in Ruby it is done with the #{} syntax inside a double-quoted string: "Hello, #{name}!". Within a few months, I think any competent Python programmer can transfer their proficiency over to Ruby.
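One detail that tends to trip up newcomers is that interpolation only happens in double-quoted strings. A quick sketch (the variable names are arbitrary):

```ruby
name = "Matz"
gems = ["ruby_llm", "neighbor"]

# Any Ruby expression can go inside #{...} in a double-quoted string.
greeting = "Hello, #{name}! You have #{gems.size} gems: #{gems.join(', ')}."
puts greeting

# Single-quoted strings are taken literally; nothing is interpolated.
literal = 'Hello, #{name}!'
puts literal
```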

Recent AI-based Gems

Despite not being in the conversation when discussing AI, Ruby has had some recent advancements in the world of gems. I will highlight some of the most impressive recent releases that we have been using in our agency to build AI apps:

RubyLLM (link) — Any GitHub repo that gets more than 2k stars within a few weeks of release deserves a mention, and RubyLLM is definitely worthy. I have used many clunky implementations of LLM providers from libraries like LangChain and LlamaIndex, so using RubyLLM was like a breath of fresh air. As a simple example, let’s take a look at a tutorial demonstrating multi-turn conversations:

    require 'ruby_llm'

    # Create a model and give it instructions
    chat = RubyLLM.chat
    chat.with_instructions "You are a friendly Ruby expert who loves to help beginners."

    # Multi-turn conversation
    chat.ask "Hi! What does attr_reader do in Ruby?"
    # => "Ruby creates a getter method for each symbol...

    # Stream responses in real time
    chat.ask "Could you give me a short example?" do |chunk|
      print chunk.content
    end
    # => "Sure!
    # ```ruby
    # class Person
    # attr...

Simply amazing. Multi-turn conversations are handled automatically for you. Streaming is a breeze. Compare this to a similar implementation in LangChain:

    from langchain_openai import ChatOpenAI
    from langchain_core.messages import SystemMessage, HumanMessage, AIMessage

    SYSTEM_PROMPT = "You are a friendly Ruby expert who loves to help beginners."

    chat = ChatOpenAI(streaming=True)
    history = [SystemMessage(content=SYSTEM_PROMPT)]

    def ask(user_text: str) -> None:
        """Stream the answer token-by-token and keep the context in memory."""
        history.append(HumanMessage(content=user_text))
        answer = ""
        # .stream yields message chunks as they arrive
        for chunk in chat.stream(history):
            print(chunk.content, end="", flush=True)
            answer += chunk.content
        print()  # newline after the answer
        # each chunk holds only a fragment, so store the accumulated text
        history.append(AIMessage(content=answer))

    ask("Hi! What does attr_reader do in Ruby?")
    ask("Great - could you show a short example with attr_accessor?")

Yikes. And it’s important to note that this is a grug implementation. Want to know how LangChain really expects you to manage memory? Check out these links, but grab a bucket first; you may get sick.

Neighbor (link) — This is an excellent library to use for nearest-neighbor search in a Rails application. Very useful in a RAG setup. It integrates with Postgres, SQLite, MySQL, MariaDB, and more. It was written by Andrew Kane, the same guy who wrote the pgvector extension that allows Postgres to behave as a vector database.
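If nearest-neighbor search is new to you, the idea can be sketched in a few lines of plain Ruby. This is not the gem's API, which runs the search inside your database. The three-dimensional "embeddings" and document titles below are invented for illustration.

```ruby
# Toy embeddings; real ones come from an embedding model and have
# hundreds or thousands of dimensions.
DOCUMENTS = {
  "Rails guide"    => [0.9, 0.1, 0.0],
  "Pasta recipe"   => [0.0, 0.2, 0.9],
  "Sinatra basics" => [0.8, 0.3, 0.1]
}.freeze

# Cosine similarity: 1.0 means the vectors point in the same direction.
def cosine_similarity(a, b)
  dot = a.zip(b).sum { |x, y| x * y }
  norm = ->(v) { Math.sqrt(v.sum { |x| x * x }) }
  dot / (norm.call(a) * norm.call(b))
end

# Brute-force search: score every document and keep the top k titles.
def nearest(query, k: 2)
  DOCUMENTS.max_by(k) { |_title, vector| cosine_similarity(query, vector) }
           .map(&:first)
end

query = [1.0, 0.2, 0.0] # pretend this is the embedding of "Ruby web frameworks"
puts nearest(query).inspect
```

In a RAG setup, the titles returned here would be the chunks of text you pass to the LLM as context.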

Async (link) — This gem has been around for several years, and it has been making waves in the Ruby community. Async is a fiber-based framework for Ruby that runs non-blocking I/O tasks concurrently while letting you write simple, sequential code. Fibers are like mini-threads that each have their own mini call stack and hand control to one another cooperatively. While not strictly a gem for AI, it has helped us create features like web scrapers that run blazingly fast across thousands of pages. We have also used it to handle streaming of chunks from LLMs.
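To get a feel for fibers without installing anything, here is a small sketch using Ruby's built-in `Fiber` class. It is not the Async gem's API: the round-robin loop below is a toy scheduler, and `Fiber.yield` merely stands in for "waiting on I/O".

```ruby
require "fiber" # only needed for Fiber#alive? on older Ruby versions

log = []

# Each fiber runs until it calls Fiber.yield, then hands control back.
fetch = lambda do |name|
  Fiber.new do
    log << "#{name}: request sent"
    Fiber.yield # pretend we are waiting on the network here
    log << "#{name}: response handled"
  end
end

fibers = [fetch.call("page-1"), fetch.call("page-2")]

# A tiny round-robin scheduler: resume each fiber until all have finished.
until fibers.empty?
  fibers.each(&:resume)
  fibers.select!(&:alive?)
end

puts log
```

Both requests are "sent" before either response is handled, which is the interleaving that makes I/O-heavy work like scraping fast. Async provides a real scheduler that does this around actual non-blocking I/O.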

Torch.rb (link) — If you are interested in training deep learning models, then surely you have heard of PyTorch. Well, PyTorch is built on LibTorch, which essentially has a lot of C/C++ code under the hood to perform ML operations quickly. Andrew Kane took LibTorch and made a Ruby adapter over it to create Torch.rb, essentially a Ruby version of PyTorch. Andrew Kane has been a hero in the Ruby AI world, authoring dozens of ML gems for Ruby.

Summary

In short: building a web application with AI integration quickly and cheaply calls for a monolithic architecture, and a monolith in turn calls for a single-language application. Your main options are either Python or Ruby. If you go with Python, you will probably use Django for your web framework. If you go with Ruby, you will be using Ruby on Rails. At our agency, we found Django’s lack of features disappointing. Rails has impressed us with its feature set and emphasis on simplicity. We were thrilled to find almost no issues on the AI side.

Of course, there are times where you will not want to use Ruby. If you are conducting research in AI or training machine learning models from scratch, then you will likely want to stick with Python. Research almost never involves building web applications. At most you’ll build a simple interface or dashboard in a notebook, but nothing production-ready. You’ll likely want the latest PyTorch updates to ensure your training runs quickly. You may even dive into low-level C/C++ programming to squeeze as much performance as you can out of your hardware. Maybe you’ll even try your hand at Mojo.

But if your goal is to integrate the latest LLMs — either open or closed source — into web applications, then we believe Ruby to be the far superior option. Give it a shot yourselves!

In part three of this series, I will dive into a fun experiment: just how simple can we make a web application with AI integration? Stay tuned.